TCP socket - SO_SNDBUF

I came across some code that, after connecting a non-blocking TCP socket, did:

```c
int sndsize = 2048;
/* Request a 2 KB kernel send buffer (Linux doubles this value internally). */
setsockopt(socket, SOL_SOCKET, SO_SNDBUF, &sndsize, sizeof(sndsize));
```

The socket was then used to send video data. In other words, it sent a lot of data, and it was designed to use as much bandwidth as possible up to a maximum threshold.

Since the socket was non-blocking, data was buffered in userspace and handed to the kernel whenever the socket became writable.
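A minimal sketch of that userspace buffering pattern, assuming a POSIX system. Whenever the socket is writable, the application pushes as many pending bytes as the kernel will accept and stops at EAGAIN/EWOULDBLOCK. The `outbuf` struct and the `set_nonblocking()` and `flush_outbuf()` helpers are hypothetical names for illustration and do not come from the original code:

```c
#include <errno.h>
#include <fcntl.h>
#include <sys/types.h>
#include <sys/socket.h>

/* Hypothetical userspace output buffer: bytes [off, len) are still pending. */
struct outbuf {
    const char *data;
    size_t len;
    size_t off;
};

/* Put fd into non-blocking mode. Returns 0 on success, -1 on error. */
int set_nonblocking(int fd)
{
    int flags = fcntl(fd, F_GETFL, 0);
    if (flags == -1)
        return -1;
    return fcntl(fd, F_SETFL, flags | O_NONBLOCK) == -1 ? -1 : 0;
}

/*
 * Hand as many pending bytes as possible to the kernel. Stops when the
 * kernel send buffer is full (EAGAIN/EWOULDBLOCK) or nothing is left.
 * Returns the number of bytes accepted by the kernel, or -1 on error.
 */
ssize_t flush_outbuf(int fd, struct outbuf *ob)
{
    size_t sent = 0;

    while (ob->off < ob->len) {
        ssize_t n = send(fd, ob->data + ob->off, ob->len - ob->off, 0);
        if (n > 0) {
            ob->off += (size_t)n;
            sent += (size_t)n;
        } else if (n < 0 && errno == EINTR) {
            continue;              /* interrupted by a signal: retry */
        } else if (n < 0 && (errno == EAGAIN || errno == EWOULDBLOCK)) {
            break;                 /* buffer full: wait until writable again */
        } else {
            return -1;             /* error, or an unexpected zero-length send */
        }
    }
    return (ssize_t)sent;
}
```

An event loop would call `flush_outbuf()` each time the socket is reported writable and keep any leftover bytes for the next round. The smaller the kernel send buffer, the fewer bytes each call can hand over.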

The description of SO_SNDBUF in `man 7 socket` states:

> SO_SNDBUF
>
> Sets or gets the maximum socket send buffer in bytes. The kernel doubles this value (to allow space for bookkeeping overhead) when it is set using setsockopt(), and this doubled value is returned by getsockopt(). The default value is set by the wmem_default sysctl and the maximum allowed value is set by the wmem_max sysctl. The minimum (doubled) value for this option is 2048.
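To see what the kernel actually applied, read the value back right after setting it. A minimal sketch follows; `set_sndbuf()` is a hypothetical helper name. On Linux the reported value should be at least double the request for small requests, and some kernel versions raise it further to an internal minimum. Other operating systems may not double the value at all, so treat the exact number as platform-dependent:

```c
#include <sys/socket.h>

/*
 * Request an SO_SNDBUF size and return the size the kernel actually applied,
 * as reported by getsockopt(). Returns -1 on error.
 */
int set_sndbuf(int fd, int requested)
{
    int effective = 0;
    socklen_t len = sizeof(effective);

    if (setsockopt(fd, SOL_SOCKET, SO_SNDBUF, &requested, sizeof(requested)) == -1)
        return -1;
    if (getsockopt(fd, SOL_SOCKET, SO_SNDBUF, &effective, &len) == -1)
        return -1;
    return effective;
}
```

For example, calling `set_sndbuf(fd, 2048)` on a freshly created TCP socket and printing the result shows whether the doubling happens on a given system.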

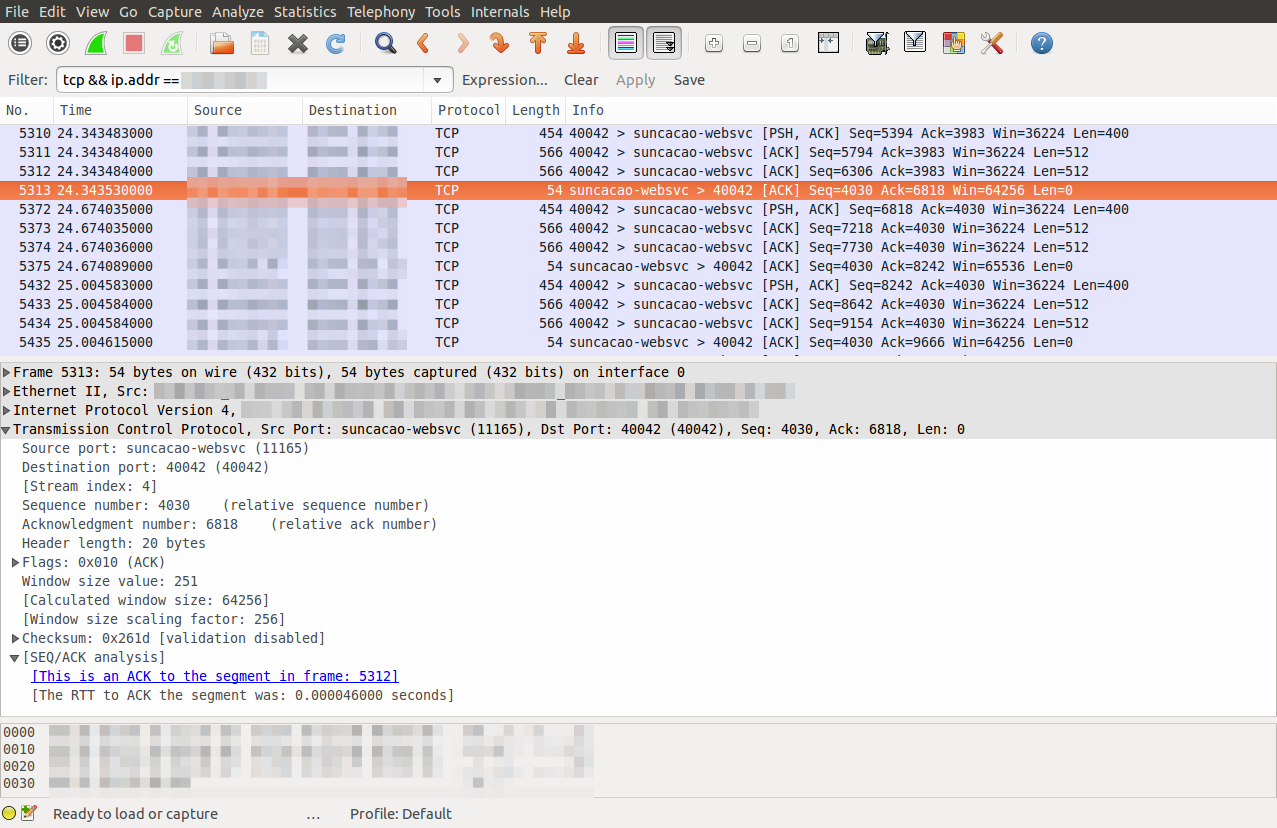

Nevertheless, lowering the socket send buffer to 2K resulted in suboptimal transport:

- Small packets being sent over the connection

- Very low bandwidth being utilized
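As a rough illustration of why bandwidth can collapse: sent-but-unacknowledged TCP data has to stay in the send buffer. If that buffer is what limits the data in flight, throughput cannot exceed roughly the buffer size divided by the round-trip time. The 20 ms RTT below is an assumed figure, not a measurement from this connection. The requested 2048 bytes is used as an approximation of usable payload, since the kernel's doubling is meant for bookkeeping:

$$
\text{throughput}_{\max} \approx \frac{B_{\text{snd}}}{\text{RTT}} = \frac{2048\ \text{B}}{0.020\ \text{s}} = 102\,400\ \text{B/s} \approx 0.82\ \text{Mbit/s}
$$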

Obviously, the kernel generated more I/O events, since calling send() on the socket would quickly return EAGAIN/EWOULDBLOCK. However, I didn’t expect the kernel to limit TCP segments to a maximum of 512 bytes of TCP payload.
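Each EAGAIN/EWOULDBLOCK on a non-blocking socket usually means going back to the event loop and waiting for the socket to become writable again, so a tiny send buffer multiplies these round trips. A minimal sketch of that wait using POSIX poll(), assuming a single socket and an arbitrary caller-chosen timeout; `wait_writable()` is a hypothetical helper and does not come from the original code:

```c
#include <errno.h>
#include <poll.h>
#include <sys/socket.h>

/*
 * Wait until fd is writable or timeout_ms elapses.
 * Returns 1 if writable, 0 on timeout, -1 on error or hang-up.
 */
int wait_writable(int fd, int timeout_ms)
{
    struct pollfd pfd = { .fd = fd, .events = POLLOUT, .revents = 0 };

    for (;;) {
        int rc = poll(&pfd, 1, timeout_ms);
        if (rc == -1 && errno == EINTR)
            continue;              /* interrupted: retry (timeout restarts) */
        if (rc == -1)
            return -1;
        if (rc == 0)
            return 0;              /* timed out: send buffer still full */
        if (pfd.revents & POLLERR) {
            int err = 0;
            socklen_t elen = sizeof(err);
            /* Fetch the pending socket error so the caller can inspect errno. */
            if (getsockopt(fd, SOL_SOCKET, SO_ERROR, &err, &elen) == 0 && err != 0)
                errno = err;
            return -1;
        }
        if (pfd.revents & (POLLHUP | POLLNVAL))
            return -1;
        return (pfd.revents & POLLOUT) ? 1 : 0;
    }
}
```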

After inspecting the capture and the packet timestamps in Wireshark, it seemed that the sender periodically paused sending for fractions of a second.

I could see ACKs coming back very fast, but there would be a pause between an ACK being received and the next packet being sent out.

I guess that somehow the kernel and the application couldn’t handle the number of ping-pongs between kernel and userspace caused by the small send buffer, while at the same time processing the ACKs generated by the receiving side.

I haven’t investigated too much, so if you have an explanation, please enlighten me!

In any case, removing the SO_SNDBUF setting and relying on the defaults solved the issue.

Wireshark now showed a more typical flow of data.

The connection now performed flow control as you would expect, and TCP segments were close to the advertised MSS and therefore close to the MTU of the network.
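To compare the segment sizes seen in Wireshark with what the kernel reports as the MSS, the TCP_MAXSEG option can be read with getsockopt(). A minimal sketch; `get_mss()` is a hypothetical helper name. The value depends on the platform and on the connection state, and an unconnected socket reports a default rather than a negotiated value:

```c
#include <netinet/in.h>
#include <netinet/tcp.h>
#include <sys/socket.h>

/*
 * Return the TCP maximum segment size the kernel reports for outgoing
 * segments on this socket, or -1 on error.
 */
int get_mss(int fd)
{
    int mss = 0;
    socklen_t len = sizeof(mss);

    if (getsockopt(fd, IPPROTO_TCP, TCP_MAXSEG, &mss, &len) == -1)
        return -1;
    return mss;
}
```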